This lesson presents performance enhancement tools for your switching infrastructure in the face of extreme bandwidth requirements. They include microsegmentation via collision domains, full-duplex communication, and higher media rates such as Gigabit Ethernet and 10 Gigabit Ethernet. We will also describe spanning tree as a way to detect and prevent loops.

Microsegmentation

One of the key differentiators of a switch is its ability to perform microsegmentation. This is not about its ability to intelligently forward frames only out the port where the destination is located. It is about breaking the connected machines into multiple collision domains. In the old days, a hub was a single collision domain, which meant that all the machines connected to the hub competed with one another as soon as any of them tried to transmit.

So only one machine could transmit at any point in time. The others would sense that the channel was busy, back off, and try again later according to the rules of Carrier Sense Multiple Access with Collision Detection (CSMA/CD), the access method that governs shared Ethernet. In the case of a switch, each port is its own collision domain, so you could say that there are effectively no collisions in a switched environment. The switch also has what is known as a switching fabric, an internal component that allows multiple conversations to take place at the same time. Two things need to happen for this to work: each port needs to be its own collision domain, and the switching fabric needs to allow multiple conversations at the same time.
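The difference between a hub and a switch can be reduced to a count of collision domains. The sketch below is a minimal model, assuming a device is described only by its type and port count; `collision_domains` and `max_simultaneous_senders` are hypothetical helper names, not part of any Cisco tool.

```python
# Minimal model: a hub shares one collision domain across all of its ports,
# while a switch gives every port its own (microsegmentation).

def collision_domains(device_type: str, ports: int) -> int:
    """Return the number of collision domains created by one hub or switch."""
    if ports < 1:
        raise ValueError("a device needs at least one port")
    if device_type == "hub":
        return 1          # every port shares the same medium
    if device_type == "switch":
        return ports      # one collision domain per port
    raise ValueError(f"unknown device type: {device_type!r}")


def max_simultaneous_senders(device_type: str, ports: int) -> int:
    """Under CSMA/CD only one station per collision domain transmits at a time.
    For a switch this also assumes the fabric can carry every conversation."""
    return collision_domains(device_type, ports)


print(collision_domains("hub", 24))     # 1
print(collision_domains("switch", 24))  # 24
```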

This is similar to each car commuting to work having its own on-ramp to the highway, and the highway having multiple lanes so that many cars (conversations) can travel at the same time. The capacity of the switching fabric defines how many concurrent conversations the switch can carry, so the bigger the better. Switching fabric capacity is measured in bits per second, and some of today's switches reach fabric capacities in the Gb/s and even Tb/s range.
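A rough way to reason about "the bigger the better" is to ask how much fabric capacity would let every port send and receive at line rate at the same time. This is a sizing sketch only, assuming all ports run full-duplex (so each port can carry its speed in both directions at once); the port mix in the example and the name `fabric_capacity_gbps` are hypothetical.

```python
# Rough fabric sizing: sum every port's speed, doubled when ports run
# full-duplex because each port can send and receive at the same time.

def fabric_capacity_gbps(port_speeds_gbps: list, full_duplex: bool = True) -> float:
    """Fabric bandwidth (Gb/s) needed for all ports to run at line rate at once."""
    total = sum(port_speeds_gbps)
    return total * 2 if full_duplex else total


# Hypothetical switch: 48 x 1 Gb/s access ports plus 4 x 10 Gb/s uplinks.
ports = [1.0] * 48 + [10.0] * 4
print(fabric_capacity_gbps(ports))  # 176.0
```

A switch whose fabric is smaller than this figure can still work well in practice, because not every port transmits at full rate at the same time.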

Duplex Overview

Full-duplex communication is another differentiator of switches, and it was introduced to resolve bandwidth issues. In full-duplex communication, for example between a server and a switch, effective bandwidth increases because the devices can send and receive at the same time, each at the full link rate. In other words, a 100 Mb/s link at full-duplex actually provides 200 Mb/s of effective bandwidth. This works on point-to-point connections only. Connecting a hub to a switch, for example, is considered point-to-multipoint: because the hub's shared segment must be able to detect collisions, that link requires half-duplex communication.
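The two rules in this paragraph, half-duplex toward a hub and doubled effective bandwidth on a full-duplex point-to-point link, can be sketched as follows. This assumes a link is described only by what sits on the far end; `required_duplex` and `effective_bandwidth_mbps` are hypothetical names.

```python
# A hub (shared, point-to-multipoint) needs half-duplex; any single device
# such as a switch, server, or PC (point-to-point) can run full-duplex.

def required_duplex(peer: str) -> str:
    """A shared hub segment must detect collisions, so it needs half-duplex."""
    return "half" if peer == "hub" else "full"


def effective_bandwidth_mbps(link_speed_mbps: int, duplex: str) -> int:
    """Full-duplex carries the link speed in each direction at once;
    half-duplex shares the link speed between the two directions."""
    if duplex == "full":
        return 2 * link_speed_mbps
    if duplex == "half":
        return link_speed_mbps
    raise ValueError(f"unknown duplex mode: {duplex!r}")


d = required_duplex("server")
print(d, effective_bandwidth_mbps(100, d))  # full 200
d = required_duplex("hub")
print(d, effective_bandwidth_mbps(100, d))  # half 100
```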

With half-duplex, data flows in only one direction at a time and there is a high potential for collisions, but in a hub environment you will have collisions anyway. One channel is used to transmit or receive, and the other is used to sense collisions. Full-duplex, by contrast, is effectively collision free, and it assumes a microsegmented environment. A walkie-talkie conversation is a good analogy for half-duplex: if you speak, you have to stop speaking for the other side to speak. A full-duplex conversation is similar to a telephone conversation, in which both sides can speak at the same time.

Setting Duplex and Speed Options

You can change the duplex and speed settings with the duplex and speed commands. In interface configuration mode, use the duplex command to set a static configuration of either half or full duplex, or use the auto keyword to have both sides negotiate the best setting. The speed command is also an interface configuration command: you can set it statically to 10, 100, or 1000 Mb/s, or let it autonegotiate with the auto keyword. The default duplex for Fast Ethernet and 10/100/1000 ports is to autonegotiate, but the default for Gigabit ports on fiber connections is full-duplex.
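To make the command syntax concrete, here is a hypothetical Python helper that renders the interface configuration lines described above. Only the keywords named in the text are accepted; the interface name in the example is an assumption, and the helper does not check speed and duplex combinations that a particular platform may reject.

```python
# Hypothetical helper that renders IOS interface configuration lines
# using the speed and duplex keywords described in the text.

VALID_SPEED = {"10", "100", "1000", "auto"}
VALID_DUPLEX = {"half", "full", "auto"}


def interface_config(interface: str, speed: str = "auto", duplex: str = "auto") -> str:
    """Return the configuration lines for one interface as a single string."""
    if speed not in VALID_SPEED:
        raise ValueError(f"unsupported speed: {speed!r}")
    if duplex not in VALID_DUPLEX:
        raise ValueError(f"unsupported duplex: {duplex!r}")
    lines = [
        f"interface {interface}",
        f" speed {speed}",      # indented: entered in interface configuration mode
        f" duplex {duplex}",
    ]
    return "\n".join(lines)


print(interface_config("FastEthernet0/1", speed="100", duplex="full"))
```

This prints the three lines `interface FastEthernet0/1`, ` speed 100`, and ` duplex full`, which is the static configuration you would enter on the switch.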

These settings can be displayed with the show interfaces command, which reports the duplex and speed settings whether they were statically set or autonegotiated. Note that autonegotiation sometimes fails. On Cisco switches, if negotiation fails, the port falls back to half-duplex, which may not match the setting on the connected device and results in a duplex mismatch. To avoid this, set the duplex statically on both ends of the link.
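The following simplified sketch shows why static duplex on only one end causes trouble. It assumes, as the text states, that an autonegotiating port that hears no negotiation falls back to half-duplex, and it ignores speed sensing. `resolve_duplex` and `is_mismatch` are hypothetical names.

```python
# Each side is configured "auto", "half", or "full". A statically configured
# port does not negotiate, so an "auto" peer hears nothing and falls back
# to half-duplex.

def resolve_duplex(local: str, peer: str) -> tuple:
    """Return the operating duplex of (local, peer) given each side's configuration."""
    if local == "auto" and peer == "auto":
        return ("full", "full")          # both negotiate; assume both support full
    local_mode = "half" if local == "auto" else local
    peer_mode = "half" if peer == "auto" else peer
    return (local_mode, peer_mode)


def is_mismatch(local: str, peer: str) -> bool:
    a, b = resolve_duplex(local, peer)
    return a != b


print(resolve_duplex("auto", "full"))  # ('half', 'full') -> duplex mismatch
print(resolve_duplex("full", "full"))  # ('full', 'full') -> consistent
```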

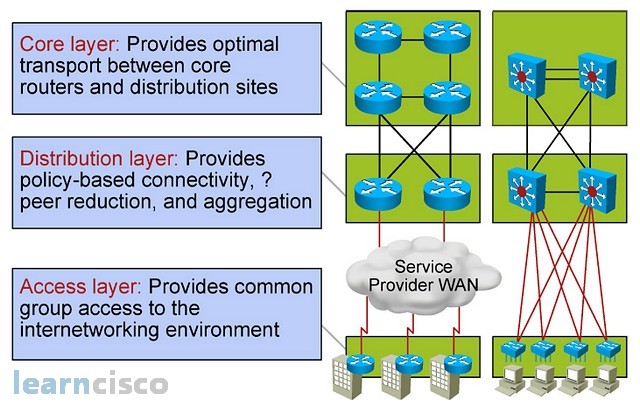

The Hierarchy of Connectivity: Three-Layer Model

When you think of speed and bandwidth, with 10 Gb/s a reality today even for servers and endpoints, it would be ideal to provide 10 Gb/s links all over the network. However, that would be cost prohibitive. One design approach is to break the network into areas or sections and allocate bandwidth according to the needs of each section. Traditional design models call for modularizing the network and, just as importantly, for arranging those modules hierarchically. The core/distribution/access model calls for an access layer that provides connectivity to endpoints and allocates bandwidth and other features and functions according to the connecting endpoints. For example, a server farm would be connected to an access layer where you would probably see many more Gb/s or even 10 Gb/s links, whereas a building access layer would contain more 100 Mb/s or Gb/s ports. The distribution layer aggregates multiple access blocks and terminates the uplinks coming from the access layer switches.

The distribution layer is where you may oversubscribe bandwidth, knowing that not all of the access blocks, or not all of the machines, will be transmitting at the same time. The core layer connects multiple distribution blocks or modules, and it is where most of the traffic in the network is transported, because in today's networks most resources, such as the Internet and centrally located server farms, sit outside the access blocks.
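Oversubscription is usually expressed as a ratio of downstream access bandwidth to upstream uplink bandwidth. The sketch below computes it for a single hypothetical access switch; the port counts and the name `oversubscription_ratio` are assumptions for illustration.

```python
# Ratio of total access-port bandwidth to total uplink bandwidth toward
# the distribution layer. A result of 2.4 means 2.4:1 oversubscription.

def oversubscription_ratio(access_ports: int, access_speed_gbps: float,
                           uplinks: int, uplink_speed_gbps: float) -> float:
    downstream = access_ports * access_speed_gbps
    upstream = uplinks * uplink_speed_gbps
    if upstream == 0:
        raise ValueError("a switch needs at least one uplink")
    return downstream / upstream


# Hypothetical access switch: 48 x 1 Gb/s ports, 2 x 10 Gb/s uplinks.
print(oversubscription_ratio(48, 1, 2, 10))  # 2.4
```

The ratio is acceptable only because, as noted above, not all machines transmit at the same time.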

Bandwidth is not the only resource allocated through this model. You can see here how security may be pushed toward the access layer to catch the bad guys before they get into the network. Most policy-based connectivity and functions such as firewalling and quality of service are allocated to the distribution layer, and the core layer ends up being a very fast switched network whose job is to transport most of the traffic in the network.

Loops

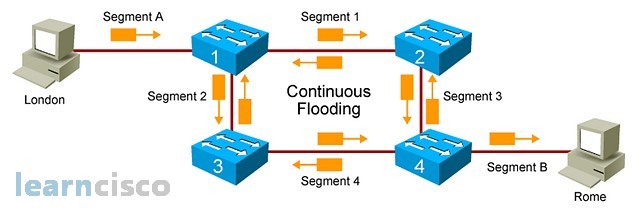

In building the hierarchy, you will probably add redundancy to some of the modules. For example, the core layer requires a certain level of redundancy and reliability because, again, it transports most of the traffic in the network, especially traffic leaving the distribution blocks or modules. When you create redundancy, you will probably create switching loops like those shown in the figure. From the standpoint of redundancy, reliability, and availability, loops are a good idea: if you have a loop like this one, a segment or link going down is not a single point of failure, because traffic finds its way around the failure to the intended destination.

The problem is that traffic follows the loop as well. Ethernet frames carry no hop count or time-to-live, so frames sent to unknown destinations, and especially broadcasts, which every switch floods out all of its other ports, can circulate around the loop indefinitely. This not only degrades performance on the switches and adds unwanted traffic to the network, but, because every machine must process every broadcast, it also degrades performance on the servers and PCs connected to the network.
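A toy flooding model makes the problem visible. It assumes switches are nodes, inter-switch links are edges, and each switch floods a broadcast out every active link except the one it arrived on. `flood`, the switch names, and the topologies are hypothetical. Counting copies in flight at each hop shows the broadcast circling a triangle forever, multiplying in a full mesh, and dying out once one link is logically blocked.

```python
# Each switch floods a broadcast out every active link except the one it
# arrived on. Nothing else removes the frame, mirroring Ethernet's lack of
# a hop limit, so max_steps is the only thing that stops the simulation.

def flood(links, start, blocked=(), max_steps=10):
    """Return the number of broadcast copies in flight at each hop."""
    active = {frozenset(pair) for pair in links} - {frozenset(pair) for pair in blocked}
    neighbors = {}
    for link in active:
        a, b = tuple(link)
        neighbors.setdefault(a, []).append(b)
        neighbors.setdefault(b, []).append(a)

    # Each copy is (switch it is arriving at, switch it came from).
    in_flight = [(n, start) for n in neighbors.get(start, [])]
    history = []
    for _ in range(max_steps):
        if not in_flight:
            break                                # the broadcast has died out
        history.append(len(in_flight))
        in_flight = [
            (n, here)
            for here, came_from in in_flight
            for n in neighbors[here]
            if n != came_from                    # every port except the ingress port
        ]
    return history


triangle = [("A", "B"), ("B", "C"), ("C", "A")]
mesh = [("A", "B"), ("A", "C"), ("A", "D"), ("B", "C"), ("B", "D"), ("C", "D")]
print(flood(triangle, "A"))                        # [2, 2, 2, 2, 2, 2, 2, 2, 2, 2]
print(flood(mesh, "A", max_steps=5))               # [3, 6, 12, 24, 48]
print(flood(triangle, "A", blocked=[("B", "C")]))  # [2]
```

In the triangle the two copies never stop, and in the four-switch mesh the number of copies doubles at every hop, which is a broadcast storm in miniature.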

Spanning Tree Protocol

The main solution for detecting and preventing loops is the Spanning Tree Protocol (STP). Spanning tree manages all the links and, through an election process, prevents loops by blocking ports, logically breaking the loop. This does not mean that the port is disconnected or disabled. It is simply blocked from the perspective of spanning tree, and by blocking that port, you prevent the loop, and with it broadcast storms and the other problems that loops cause.

The way it works is that spanning tree elects a manager of the network, called the root bridge. The root bridge sends bridge protocol data units (BPDUs) to create a consistent, tree-shaped structure across the network. The root bridge election is dynamic: the switch with the best bridge ID, meaning the numerically lowest value made up of a configurable priority followed by the switch's MAC address, wins. If that root goes down, the other switches hold a new election and one of them takes over as root. The second part of the process is the selection of the non-designated port, which is the port that will be blocked to prevent the loop. The non-designated port is the one farthest away from the root, based on the cost of each interface along the path to the root and, when costs tie, on the bridge IDs of the switches involved. Eventually, one port is blocked and the switches know not to forward traffic on that port, creating a loop-free topology and preventing broadcast storms and other problems related to redundancy in the network.
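The election and blocking steps can be sketched in a few dozen lines. This is a simplified model, not an implementation of IEEE 802.1D: it assumes point-to-point links with at most one link per switch pair, a connected network, bridge IDs given as (priority, MAC string in one fixed format), and known link costs. It ignores port IDs and BPDU timers. `spanning_tree` and the switch names are hypothetical; 32768 is the default bridge priority and 19 is the classic 802.1D path cost for a 100 Mb/s link.

```python
import heapq

# Simplified spanning tree: elect a root, compute each bridge's root path
# cost, pick root ports, then block the non-designated port on each link.

def spanning_tree(bridges, links):
    """bridges: {name: (priority, mac)}; links: {(a, b): cost}.
    Returns (root bridge, list of (switch, neighbor) pairs whose port is blocked)."""
    # 1. Root election: the lowest bridge ID wins (priority first, then MAC).
    root = min(bridges, key=lambda b: bridges[b])

    adj = {b: [] for b in bridges}
    for (a, b), cost in links.items():
        adj[a].append((b, cost))
        adj[b].append((a, cost))

    # 2. Root path cost of every bridge (Dijkstra's algorithm from the root).
    dist = {root: 0}
    heap = [(0, root)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist[u]:
            continue                      # stale heap entry
        for v, c in adj[u]:
            if d + c < dist.get(v, float("inf")):
                dist[v] = d + c
                heapq.heappush(heap, (d + c, v))

    # 3. Root port: the neighbor giving the lowest (path cost, neighbor bridge ID).
    root_port = {
        b: min(adj[b], key=lambda nc: (dist[nc[0]] + nc[1], bridges[nc[0]]))[0]
        for b in bridges if b != root
    }

    # 4. On each link, the side with the better (root path cost, bridge ID) is
    #    designated. The other side forwards only if the link is its root port;
    #    otherwise its port is the non-designated (blocked) port.
    blocked = []
    for a, b in links:
        designated, other = sorted((a, b), key=lambda x: (dist[x], bridges[x]))
        if root_port.get(other) != designated:
            blocked.append((other, designated))
    return root, blocked


bridges = {
    "S1": (32768, "0000.0000.0001"),
    "S2": (32768, "0000.0000.0002"),
    "S3": (32768, "0000.0000.0003"),
}
links = {("S1", "S2"): 19, ("S2", "S3"): 19, ("S1", "S3"): 19}
print(spanning_tree(bridges, links))  # ('S1', [('S3', 'S2')])
```

With equal priorities, S1 wins on its lower MAC address, and S3's port toward S2 is blocked because S2 has the same root path cost but a better bridge ID. Lowering S3's priority to 4096 makes S3 the root instead, and the blocked port moves to S2's port toward S1, which illustrates how priority drives the election.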
